* – This article has been archived and is no longer updated by our editorial team –

Below is our recent interview with Steve Fox, VP Marketing at Unreal Streaming Technologies:

Q: Just about anyone offers OTT services nowadays. How is your offering different?

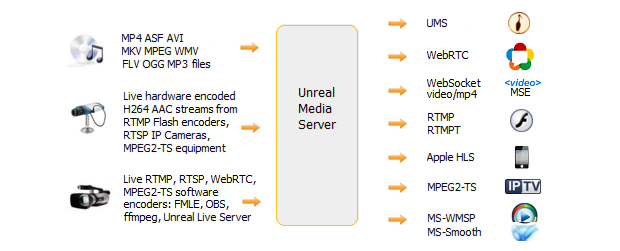

A: We don’t actually offer OTT services per se. We do offer an AWS subscription for Unreal Media Server, and one can certainly use it for OTT, as Unreal Media Server supports HLS, which is the streaming mechanism for OTT. But there is nothing special about our HLS, and OTT was never our primary specialization, so I don’t think we are in the OTT market at all. Unreal Media Server and the rest of our software are optimized and fine-tuned for low latency live streaming, which OTT does not need. The only reason our customers still sometimes use our HLS is that HLS is playable on all devices and browsers. But that’s changing now with WebRTC, which is also supported everywhere nowadays. So the majority of our customers come from markets other than OTT: the special niche markets that need low latency live streaming.

Q: So what niche markets use streaming software from Unreal Streaming Technologies?

A: IP video surveillance is quite big, for public transportation, construction sites, police and security projects. They cannot tolerate the 10-30 seconds of latency typical for HLS or Dash streaming. Live Internet auctions, game streaming and one-to-many video conferencing webinars have even more aggressive latency requirements – they need less than a second of latency. Service providers from these markets normally try a lot of different software before they find ours, or even try to develop their own, but once they find us, that’s typically the end of their research – they love the stability, scalability and feature set of our streaming software. Stability and performance are crucial for these types of applications; lots of quickly-cooked software packages offer a similar feature set, but they crash, monopolize the CPU or drop connections when serving hundreds or even dozens of concurrent players, or when receiving multiple concurrent streams published by IP cameras or WebRTC in browsers; they don’t cope well with edge-case network circumstances such as a sudden loss of connectivity or high jitter. It’s our 15 years of server expertise that makes the difference for these customers.

Q: In your previous interview with us about two years ago, your engineer was skeptical about WebRTC adoption in the streaming ecosystem. Apparently you guys have changed your mind now?

A: Two major events have happened since then, which completely changed the game and removed the last obstacles to WebRTC becoming a new standard for near-real-time streaming over IP: first, the H264 codec was fully adopted by WebRTC; second, Apple added full WebRTC support on iOS in its Safari browser. A third, less crucial but still important event was the addition of WebRTC support to the Microsoft Edge browser. Without these things, WebRTC would have remained a marginal protocol used here and there in specific apps. Now, of course, we have faced reality and added full WebRTC support to Unreal Media Server and VP8/VP9/Opus encoding support to Unreal Live Server. All high quality H264 streams sent to Unreal Media Server from IP cameras and hardware encoders can now be played by browsers via WebRTC; browser-encoded H264 streams can be published to Unreal Media Server and sent to other browsers, set-top boxes, HLS or any other player. We released this new version in May 2018 and over the summer saw immediate adoption by our customers – the time has come for WebRTC, no doubt.

Q: It’s a popular but quite obscure saying that WebRTC media servers don’t scale well. Can you please elaborate on the subject?

A: Well, that is true and not true. Part of the myth comes from the fact that Google’s open source WebRTC implementation is highly inefficient for use in media servers and gateways. It is a monstrous C++ project, very much over-architected, with millions of lines of code. It is designed for use in web browsers, which normally run only a few concurrent WebRTC sessions. Now, if you apply the same code to a media server and run a hundred concurrent WebRTC sessions, you notice that you are at 100% CPU usage. Hence the scalability issues – a single server cannot support hundreds of sessions; you need a farm or cloud of servers to do that. Each WebRTC session uses an enormous amount of CPU, about 10 times more than an RTMP session. Some of that overhead is inherent to WebRTC because it must encrypt all data going over the wire. Therefore, a WebRTC session is by definition more computationally expensive than an RTMP session. However, a large part of that overhead comes from Google’s very inefficient and over-architected implementation of the WebRTC session. The C++ classes and functions there perform an unnecessarily high amount of processing and do a lot of work that a media server does not need, such as statistics calculation, logging, etc.

Each WebRTC session in Google’s implementation uses 3-4 execution threads, which is OK if you have a few sessions but is overkill for hundreds of sessions. Long story short – if you just take Google’s implementation, then your server or gateway is not going to be very performant or scalable. But if you implement WebRTC yourself, or if you heavily optimize Google’s code (both of these tasks are very hard), then your WebRTC server can be almost as scalable as an RTMP server, and you can support a thousand WebRTC sessions on a single server.
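To put rough numbers on the scaling argument, here is a minimal back-of-the-envelope sketch. The 3-4 threads per session and the saturation points of about 100 and 1000 sessions are taken from the answer above; the function names and the assumption that CPU load is spread evenly across sessions are illustrative only, not measurements of any real server.

```python
# Back-of-the-envelope figures for the scaling argument.
# Per-session numbers come from the interview; everything else is illustrative.

def total_threads(sessions: int, threads_per_session: int) -> int:
    """Threads a server would run if every session owns its own threads."""
    return sessions * threads_per_session


def cpu_percent_per_session(sessions_at_saturation: int) -> float:
    """Average CPU share of one session, assuming load is spread evenly
    and the server reaches 100% CPU at the given number of sessions."""
    return 100.0 / sessions_at_saturation


if __name__ == "__main__":
    for sessions in (5, 100, 1000):
        low = total_threads(sessions, 3)
        high = total_threads(sessions, 4)
        print(f"{sessions} sessions -> {low}-{high} threads")
    print(f"saturating at 100 sessions: {cpu_percent_per_session(100):.2f}% CPU each")
    print(f"saturating at 1000 sessions: {cpu_percent_per_session(1000):.2f}% CPU each")
```

Under these assumptions, a browser with a handful of sessions runs a few dozen threads at most, while a server with hundreds of sessions would run several hundred or more, which is why a per-session threading model that is harmless in a browser becomes a problem on a server.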

Q: As a specialist in low latency streaming to Media Source Extensions and WebRTC, what do you think of the maturity and readiness of MSE and WebRTC in browsers for commercial video applications?

A: OK, the situation is far from perfect. I would say we are almost there with WebRTC, but far from there with MSE. Let us first talk about MSE. Media Source Extensions work very well for HLS or Dash; popular video players like flowplayer or JW Player use Media Source Extensions in browsers for HLS playback. Today that accounts for 99% of MSE use. For the 5-10 second video segments typical of HLS, Media Source Extensions work very consistently across browsers and devices. But if you read the MSE spec, you find that MSE was designed to facilitate many other uses: timeshift, where you can seek back in a live stream, pause and jump back to real time, using a buffer in the browser; playlists that allow seamless switching between different content; shorter segments for lower latency.

All these features have been in the spec for years. Nevertheless, they do not work well and/or consistently across different browsers and devices. In addition, MSE is not supported at all on iOS. Thanks a lot, Apple. Overall, this situation mostly restricts MSE use to HLS/Dash, although it may work well for low latency streaming on a specific browser with specific content.

Now, about WebRTC. Supported in all major browsers and devices and working quite consistently across them, WebRTC is a more reliable choice for low latency service providers. The only real issue is codec compatibility: different browsers and devices choose to support only certain codecs. That is a biggie. Safari on iOS does not support VP8/VP9. Chrome on Android does not support VP9. Encoding H264 high profile is not supported on iOS or Android, while decoding works perfectly. Also, b-frames in an H264 stream are not supported, yet all high quality video encoders use b-frames in H264 high profile. You must turn b-frames off for WebRTC playback. In summary, depending on browser/device compatibility requirements, a service provider needs to use different video codecs for WebRTC playback. Apart from that and the scalability issues discussed previously, WebRTC is quite mature today.
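The codec constraints described above can be turned into a small selection helper. The following is only a sketch: the unsupported-codec table restates the playback claims made in this interview as of 2018 and is not a current compatibility reference, and the platform keys, the preference order and the function names are hypothetical. Any platform not listed is assumed to play all three codecs.

```python
# Playback restrictions as stated in the interview (a 2018 snapshot, not current data).
# Platform keys are hypothetical labels chosen for this sketch.
UNSUPPORTED_PLAYBACK = {
    "ios_safari": {"VP8", "VP9"},
    "android_chrome": {"VP9"},
}

# Assumed preference order for this sketch only.
CODEC_PREFERENCE = ("VP9", "VP8", "H264")


def common_codecs(platforms):
    """Return codecs playable on every listed platform, in preference order."""
    return [
        codec
        for codec in CODEC_PREFERENCE
        if all(codec not in UNSUPPORTED_PLAYBACK.get(p, set()) for p in platforms)
    ]


def playable_via_webrtc(codec, has_b_frames):
    """H264 streams must have b-frames turned off for WebRTC playback."""
    return not (codec == "H264" and has_b_frames)


if __name__ == "__main__":
    print(common_codecs(["ios_safari", "android_chrome"]))
    print(common_codecs(["android_chrome"]))
    print(playable_via_webrtc("H264", has_b_frames=True))
```

With this table, a service that must reach both iOS Safari and Android Chrome is left with H264 only, and that stream has to be encoded without b-frames.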

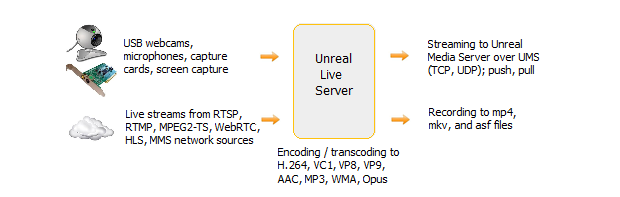

Q: You have your own live encoder named Unreal Live Server. Why would someone want to use it?

A: Unreal Live Server is quite a unique encoder, designed to encode multiple concurrent a/v sources at the same time. It does not have any user interface apart from a configuration GUI where you configure your live sources and encodings. Then you close the GUI and leave. It runs as a Windows Service and starts encoding once it receives a command from Unreal Media Server. So, unlike traditional encoders, it does not encode and push the stream all the time. If there are no active viewers or recorders, it does nothing. Big bandwidth savings there. In addition, Unreal Live Server can encode with a user-supplied codec; it is not limited to built-in codecs. Lastly, it is the lowest latency live encoder on the market; when you use VP9/Opus codecs and play with WebRTC in a browser, your end-to-end latency is 200ms.
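The on-demand behavior described here, where encoding runs only while at least one viewer or recorder is attached, can be illustrated with a simple consumer counter. This is a hypothetical sketch of the general idea, not Unreal Live Server's actual implementation or API; the class, method and source names are invented for illustration.

```python
class OnDemandEncoder:
    """Hypothetical encoder that runs only while someone is consuming its stream."""

    def __init__(self, source_name):
        self.source_name = source_name
        self.consumers = 0      # active viewers plus recorders
        self.encoding = False

    def add_consumer(self):
        """Called when the media server requests the stream for a viewer or recorder."""
        self.consumers += 1
        if not self.encoding:
            self.encoding = True    # first consumer: start encoding

    def remove_consumer(self):
        """Called when a viewer or recorder disconnects."""
        if self.consumers == 0:
            return                  # nothing to remove
        self.consumers -= 1
        if self.consumers == 0:
            self.encoding = False   # last consumer left: stop encoding and sending


if __name__ == "__main__":
    encoder = OnDemandEncoder("camera-1")
    print(encoder.encoding)     # idle until someone asks for the stream
    encoder.add_consumer()
    print(encoder.encoding)
    encoder.remove_consumer()
    print(encoder.encoding)
```

The point of the design is that an idle source costs no CPU for encoding and no upstream bandwidth, which matters when many cameras are configured but only a few are watched at any moment.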

Q: What can we expect from Unreal Streaming in the future?

A: Well, we will continue to be innovative and stay at the cutting edge of video streaming technology. Our spirit is more like a software workshop than a company, but we are trying to combine the two. We will continue to improve our own UMS streaming protocol and, of course, will support new streaming protocols and codecs as they appear on the horizon – that is always the case.
